
The Developer ID certificate is the digital signature macOS uses to verify legitimate software. The certificate that Logitech allowed to lapse was being used to secure inter-process communications, which resulted in the software not being able to start successfully, in some cases leading to an endless boot loop.

This is 100% on Apple users for letting a company decide what their computer can and can’t run. And then brag about its security like it has some super special zero trust architecture and is not just a walled garden with a single point of failure dependent on opaque decision making criteria for what code should be “allowed” to run on the system.

A key- and signature-based security model does not prove that software is safe, it proves that it is approved. They’re not the same.

Macs don’t get malware. Unless it’s malware Apple approves, those are called apps.

brings the dream of endless energy even closer

Yeah that’s not “close” until we figure out how to do fusion with regular hydrogen, you know, like the sun. When your fusion requires unstable and/or extremely rare isotopes it’s not even going to be viable compared to fission, let alone solving our energy problem.

He could put in a free ticket to get a free headset, or just make everyone’s life more difficult every single time they interact with him.

Hey, so have you or any of your coworkers ever voiced your concerns to him? Most people aren’t recording themselves through their setup just to see how it sounds unless they’re a professional announcer or something.

If you haven’t, you’re judging him for poor communication on your part.

Damn, 13 BILLION years. That’s a good percentage of the total lifetime of the solar system. Store an archive of all our mathematics, science, engineering, and programming knowledge on one of those and it might end up being what we’ll give the other animals that might evolve intelligence after we go extinct. We can only hope they use the knowledge better than we did.

German firms

As in the German bourgeoisie.

Make no mistake, German “firms” are not and never will be China’s “friends.” They’re not even Germany’s friends. This is just a further extension of the West’s outsourcing of labour to China, a decidedly one sided relationship where any benefit to China is a happy accident at best and a terrible side effect at worst in the eyes of German businesspeople.

Because .world definitely uses Cloudflare? https://checkforcloudflare.selesti.com/?q=lemmy.world

This is only a problem if the datacenters are not powered by renewables. Nearly all renewables are the result of solar energy, AKA photons, AKA heat that is hitting the Earth regardless of what we do with it. Solar panels are obvious, but even wind is the result of the sun heating different regions of the atmosphere at different rates, converting thermal energy from the sun to kinetic energy in the air. A wind turbine converts some of this kinetic energy to electrical energy (which slows the air down ever so slightly), which is dissipated as heat mostly in the data center. The thing is, if the wind turbine didn’t exist, whatever kinetic energy would have been captured would be dissipated directly as heat anyway in the form of friction in the air. Renewables only move solar heat around, and don’t generate heat of their own. Even with geothermal energy, where in theory you’re bringing heat that was trapped in the Earth to the surface, the geothermal sources we can practically take advantage of are already so close to the surface that the heat would have been released through simple conduction anyway.

It is only when you burn fossil fuels that you’re actively generating heat that would otherwise have stayed as chemical energy. But even the heat from this is not the actual concern, it’s the byproducts it generates that cause solar energy that would have been released into space to be trapped in the atmosphere. The heat generated isn’t even a rounding error compared to retaining even 1% more solar energy in the air. Same for nuclear, where you’re reducing the overall binding energy inside atomic nuclei and the reduction in energy is equal to the heat generated.

Why put them in the ocean when you can just put them on the coast and pipe ocean water through the heat exchanger? That way you can actually access the servers without a ship with a giant crane (powered by fossil fuels) hauling them back up.

Also gonna guess the maintenance intervals are atrocious with all the salt corrosion. Why not a river or lake where the water doesn’t actively hate the thought of metals existing and you don’t have microscopic creatures that will attach to literally any surface and create calcified domes for themselves, plugging up the places water is supposed to flow through?

I was baffled by the Microsoft “sea cooled datacenter” and I’m still baffled now. Like surely there are better ways to do it.

Let me guess, only the big name authors and none of the countless people posting their writing projects to the internet (which probably accounted for a way higher percentage of the training data than published novels given how much more of it there is) or the people having back-and-forth discussions on Reddit (which was likely vital to ensuring the AI responded to technical conversations in a normal sounding way).

The thing I hate most about the copyright system is how blatantly it helps the biggest creators concentrate wealth while actively excluding smaller and amateur creators. The copyright system is the barrier to entry. You can only exercise the rights theoretically given to every single creator if you make enough money from your art, but to make enough money from your art you need to be able to exercise the rights to it.

US libs: “PEOPLE IN COMMUNIST CHINA ARE FORCED TO USE SLANG WHEN TALKING ABOUT CHINESE ATROCITIES TO GET PAST CENSORSHIP!!”

Also US libs: “Unalive” “Music Festival” Bleeping out Palestine “This is fine and way better than communist China”

Also interesting how TikTok only started doing this after it was forcibly sold off from its Chinese parent company and forced to conform to “Western values” eh?

English

English- •

- 8M

- •

English

English- •

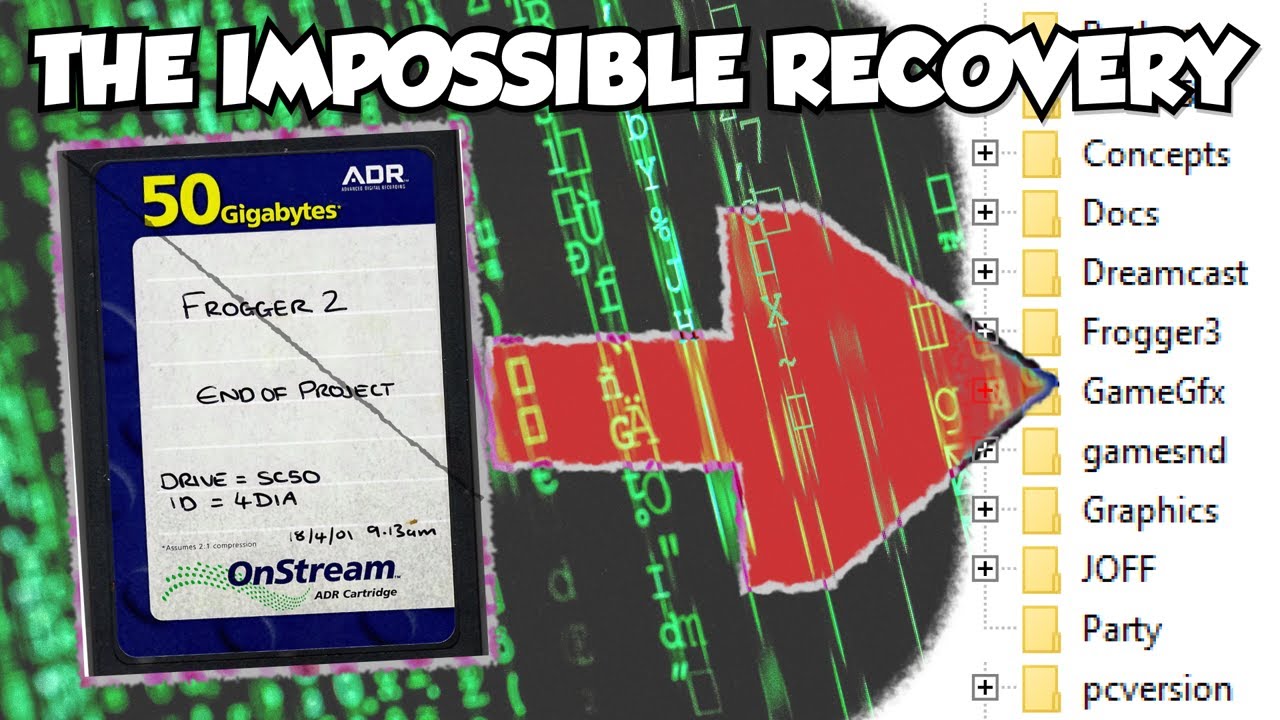

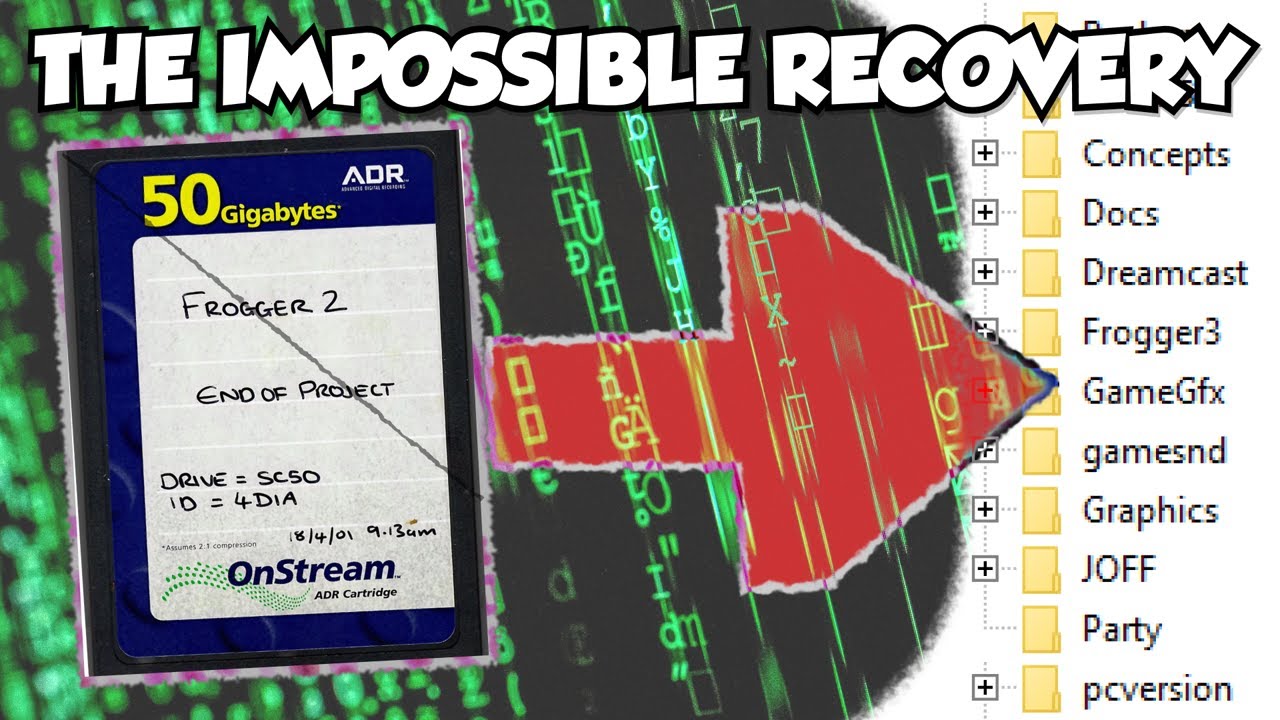

- youtube.com

- •

- 1Y

- •

English

English- •

- lemmy.ml

- •

- 1Y

- •

Slop CEO says what?