What emerging players? You can’t just whip up a competitive GPU in a jiffy, even if you have Intel money.

Also, unless they are from a different planet that has its own independent supply chain, they’d have to deal with the very same memory shortage and the very same foundries that are booked out for years.

Which is totally fine. Not every game has to support older hardware. Games are allowed to use “newer” tech.

Worth noting that I played Indy at 1600p/60 on an RTX 2080, which is a card from 2018 that I bought used for 200 bucks two years ago. This card can still run every single game out there and most of them extremely well, despite only having 8 GB of VRAM.

The whole debate is way overblown. This doesn’t mean that there aren’t games that could run a whole lot better, but overall, PC gamers with old hardware are still eating good.

Use supersampling, either at the driver level (works with nearly all 3D games: enable the feature there, then select a higher-than-native resolution in-game) or directly in games that come with the feature (usually a resolution scaling option that goes beyond 100 percent). Depending on the title, it can be very heavy on your GPU, but the image quality you get from turning several rendered pixels into one is sublime. Thin objects like power lines, as well as transparent textures like foliage, hair and chain-link fences, benefit the most from this.
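As a rough illustration of what "turning several rendered pixels into one" means, here is a minimal Python sketch of a plain box-filter downsample. It assumes a grayscale image stored as a list of rows and an integer per-axis scale factor. The function name `box_downsample` is made up for this example, and actual driver-level implementations may use smarter filters than a simple average.

```python
def box_downsample(image, factor):
    """Average each factor x factor block of a supersampled grayscale image.

    `image` is a list of equally long rows of floats; both dimensions must be
    divisible by `factor` (the per-axis supersampling factor).
    """
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be divisible by factor")
    result = []
    for y in range(0, height, factor):
        row = []
        for x in range(0, width, factor):
            # Gather every rendered sample that falls into this output pixel.
            block = [image[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        result.append(row)
    return result


# A thin vertical "power line" rendered at 2x per axis: one bright column.
supersampled = [[0.0, 1.0, 0.0, 0.0] for _ in range(4)]
print(box_downsample(supersampled, 2))  # [[0.5, 0.0], [0.5, 0.0]]
```

The line is narrower than one output pixel, so instead of either fully showing up or vanishing (which is what makes thin objects shimmer as they move), it ends up as 50 percent coverage in the final image.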

Always keep the limits of your hardware in mind though. Running a game at 2.75 or even four times the native resolution will have a serious impact on performance, even with last-gen stuff.
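Part of why the cost climbs so quickly is that a factor applied per axis multiplies the pixel count by its square. A factor can also be meant as a multiplier of the total pixel count (Nvidia's DSR factors work this way, for example: 4.00x on a 1080p display renders at 3840x2160), so it's worth checking which one a given game or driver uses. A minimal sketch covering both readings; `rendered_pixels` is a hypothetical helper:

```python
import math


def rendered_pixels(native_w, native_h, factor, per_axis=True):
    """Return (width, height, pixel_count) for a resolution scale factor.

    per_axis=True : factor scales width and height separately (pixels grow by factor**2).
    per_axis=False: factor scales the total pixel count (each axis grows by sqrt(factor)).
    """
    axis_scale = factor if per_axis else math.sqrt(factor)
    width, height = round(native_w * axis_scale), round(native_h * axis_scale)
    return width, height, width * height


native = 2560 * 1440
for factor, per_axis in [(2.0, True), (4.0, False), (2.75, True)]:
    w, h, count = rendered_pixels(2560, 1440, factor, per_axis)
    mode = "per axis" if per_axis else "total pixels"
    print(f"{factor}x ({mode}): {w}x{h}, {count / native:g}x the native pixel count")
```

Read per axis, 2.75x at 1440p already means shading more than seven times as many pixels as native.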

Emulators often have this feature as well, by the way, and there it tends to hardly matter, since emulation is usually more CPU-bound (except for systems that are very tricky to emulate). Render resolution and output resolution are often separate. I’ve played old console games at 5K resolution, for example. Even ancient titles look magnificent like that.

I vaguely recall playing one of the two about 20 years ago (looking at the screenshots, I think it was the second game). It was a bonus game on a CD from some computer or gaming magazine. Even two decades ago, this soon after release, it already felt unbelievably dated and clunky. The PC port was also complete garbage, with lots of bugs, awful visuals even by PS1-port standards and poor controls.

If you’re nostalgic for these games, they might be worth revisiting (although you’re probably remembering them as more impressive than they actually were), but if you’re not, I doubt they are worth picking up, even with the improvements from GOG.

To compare these two with another dinosaur game from that era that received reviews just as poor as the PC version of Dino Crisis: Trespasser was far more sophisticated and fun, in my opinion at least, and certainly a technical marvel by comparison. It’s not just that it’s fully 3D, with huge open areas (not possible on PS1, of course), but also the way it pioneered physics interactions. My favorite unscripted moment was a large bipedal dinosaur at the edge of the draw distance stumbling (possible thanks to the procedural animations) and bumping into the roof of a half-destroyed building, causing it to collapse. That’s outrageous for 1998! I’ve only ever seen this happen once at this spot in the game, so it’s certainly not scripted.

Looking at the screenshots, I thought it was a port of a mid-gen PS4 game, but apparently, it’s a one-year-old former PS5 exclusive. Then again, this might explain the modest hardware requirements: you don’t often see a GTX 1060 6 GB (a card from 2016) as the minimum GPU for a AAA open-world game anymore. Perhaps it’ll run well on the Steam Deck, which is always appreciated. Reviews are solid enough that I might pick it up on sale.

Maxwell came out in 2014, Pascal in 2016 and Volta in 2017. Pretty long run for Maxwell in particular.

I was curious about the AMD side of things: they already reduced support for Polaris (2016) and Vega (2017) in September 2023.

It’s based on the Xbox 360/PS3 console port of the game. People figured this out pretty quickly, because a VTOL flying mission that these consoles couldn’t handle was missing from the remaster as well (it was later added back in with a patch). Colors are oversaturated. Texture, object and lighting quality have been down- or sidegraded: many assets aren’t worse on a purely technical level, just different without being better, for no apparent reason, as if the outsourced Russian devs had a quota of changed assets to fill. Lots of smaller and larger physics interactions are gone, because they were never part of that old console port. The AI (quite a big selling point of the original as well, on top of the graphics and physics) has been simplified too. The sprinkles of ray-tracing features added here and there, as well as some nicer water physics, do not make up for the many visual and gameplay deficiencies. On top of that, there are game-breaking glitches that weren’t part of the original.

The biggest overall problem I have with it is that it just doesn’t look and feel like Crysis anymore and instead looks like a generic tropical Unity Engine survival game. The original had a very distinct visual identity: a muted, realistic look, but with enough intentional artistic flourishes to make it more than just a groundbreaking attempt at photorealism. You can clearly see this if you compare the original hilltop sunrise to the remaster’s. Crysis also had an almost future-milsim-like approach to its gameplay that is now a shell of its former self.

I will admit that to the casual player, many of these differences are minor to unnoticeable. If you haven’t spent far too much time with the original, you’re unlikely to notice the vast majority of it and might just notice how ridiculously saturated everything looks.

At the very least you can still buy the original on PC. On gog, it’s easy to find, but on Steam, it’s hidden for some reason [insert speculation as to why here]: If you use Steam’s search function, only the remaster appears. You have to go to the store page of the stand-alone add-on Crysis Warhead (the only Crysis game that did not receive the remaster treatment, likely because it was never ported to console), which can be purchased in a bundle with the original as the Maximum Edition (this edition also does not appear in the search results): https://store.steampowered.com/sub/987/

Are you seriously suggesting that in the age of rage-fueled campaigns against any game that even dares to show a non-male in a positive light or lets the player change pronouns, user scores are reliable?

You yourself are bringing this culture war bullshit up as if it were valid criticism, which means I have a hard time taking anything you’re writing even remotely seriously.

Can we stop with the fake frame nonsense? They aren’t any less real than other frames created by your computer. This is no different from the countless other shortcuts games have been using for decades.

Also, input latency isn’t “sacrificed” for this. There is about 10 ms of overhang with 4x DLSS 4 frame gen, but it is easily compensated for by the increase in frame rate.

The math is pretty simple: at 60 fps native, a new frame needs to be generated every 16.67 ms (1000 ms / 60). Leaving out latency from the rest of the hardware and software (since it varies a lot between input and output devices and even from game to game, not to mention that in many games, the graphics frame rate and e.g. the physics frame rate differ), this means that with three additional frames generated per “non-fake” frame, we see a new frame on screen every 4.17 ms (assuming the display can output 240 Hz). The system still accepts input and visibly moves the viewport based on user input between “fake” frames using reprojection, a technique borrowed from VR (where, in my experience, older approaches already work exceptionally well, even at otherwise unplayably low frame rates, provided the game doesn’t freeze). This means we arrive at 14.17 ms of latency with the overhang, but four times the visual fluidity.

It’s even more striking at lower frame rates. Let’s assume a game is struggling to run at the desired settings and just about manages 30 fps (current example: Cyberpunk 2077 at RT Overdrive settings and 4K on a 5080). That’s one native frame every 33.33 ms. With three synthetic frames, we get one frame every 8.33 ms. Add 10 ms of input lag and we arrive at a total of 18.33 ms, close to the 16.67 ms input latency of native 60 fps. You cannot tell me that this wouldn’t feel significantly more fluid to the player. I’m pretty certain you would actually prefer it over native 60 fps in a blind test, since the screen gets refreshed 120 times per second.
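The arithmetic from the last two paragraphs can be written down as a small Python function. It follows the simplified model used above (displayed frame interval plus a flat overhang, ignoring every other source of latency), so treat the numbers as an illustration of that reasoning, not as measured latency. The function name is made up, and the 10 ms default is simply the overhang figure quoted above.

```python
def frame_gen_estimate(native_fps, generated_per_native=3, overhang_ms=10.0):
    """Apply the simplified frame-generation model from the comment above.

    Returns (displayed frame interval in ms, displayed fps, estimated latency in ms),
    where the latency estimate is just the displayed interval plus a fixed overhang.
    """
    native_interval_ms = 1000.0 / native_fps
    frames_per_native = 1 + generated_per_native  # one rendered + N synthetic
    display_interval_ms = native_interval_ms / frames_per_native
    displayed_fps = native_fps * frames_per_native
    latency_ms = display_interval_ms + overhang_ms
    return round(display_interval_ms, 2), displayed_fps, round(latency_ms, 2)


print(frame_gen_estimate(60))          # (4.17, 240, 14.17)
print(frame_gen_estimate(30))          # (8.33, 120, 18.33)
print(frame_gen_estimate(60, 0, 0.0))  # native 60 fps baseline: (16.67, 60, 16.67)
```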

Keep in mind that the artifacts from previous generations of frame generation, like smearing and shimmering, are pretty much gone now, at least based on the footage I’ve seen, and frame pacing appears to be improved as well, so there really aren’t any downsides anymore.

Here’s the thing, though: all of this remains optional. If you feel the need to be a purist about “real” and “fake” frames, nobody is stopping you from ignoring this setting in the options menu. Developers will, however, increasingly be using it, because it makes higher settings that were previously impossible to run feasible on current hardware. No, that’s not laziness; it’s exploiting hardware and software capabilities, just as developers always have.

Obligatory disclaimer: My card is several generations behind (an RTX 2080, which means I can’t use Nvidia’s frame gen at all, not even 2x, but I am benefiting from the new super resolution transformer and ray reconstruction), and I don’t plan on replacing it any time soon, since it’s more than powerful enough right now. I’ve been using a mix of Intel, AMD and Nvidia hardware for decades, depending on which suited my needs and budget at any given time, and I’ll continue to use this vendor-agnostic approach. My current favorite combination is AMD for the CPU and Nvidia for the GPU, since I think it’s the best of both worlds right now, but this might change by the time I make my next substantial hardware upgrade.

You’re still seeing ray tracing as a graphics option instead of what it actually is: Something that makes game development considerably easier while at the same time dramatically improving lighting - provided it replaces rasterized graphics completely. Lighting levels the old-fashioned way is a royal pain in the butt, time- and labor-intensive, slow and error-prone. The rendering pipelines required to pull it off convincingly are a rat’s nest of shortcuts and arcane magic compared to the elegant simplicity of ray tracing.
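To give a sense of that simplicity, here is a toy Python ray tracer: one primary ray per pixel, a single sphere and Lambertian (diffuse) shading, printed as ASCII. Everything in it (scene, camera setup, light direction, function names) is made up for illustration, and it is nowhere near what a game engine does, but it shows that the core of the technique is just intersecting rays with geometry. Shadows and reflections follow the same pattern by casting further rays from the hit point.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def ray_sphere_hit(origin, direction, center, radius):
    """Distance along the ray to the nearest hit in front of the origin, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    a = dot(direction, direction)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    discriminant = b * b - 4 * a * c
    if discriminant < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(discriminant)
    for t in ((-b - root) / (2 * a), (-b + root) / (2 * a)):
        if t > 1e-6:
            return t
    return None


def shade_pixel(px, py, width, height,
                center=(0.0, 0.0, 3.0), radius=1.0, to_light=(-1.0, 1.0, -1.0)):
    """Trace one primary ray through pixel (px, py) and return brightness in [0, 1]."""
    # Pinhole camera at the origin looking down +z, image plane at z = 1.
    x = (px + 0.5) / width * 2 - 1
    y = 1 - (py + 0.5) / height * 2
    direction = normalize((x, y, 1.0))
    t = ray_sphere_hit((0.0, 0.0, 0.0), direction, center, radius)
    if t is None:
        return 0.0
    hit = tuple(d * t for d in direction)
    normal = normalize(tuple(h - c for h, c in zip(hit, center)))
    # Lambert's cosine law: brightness follows the angle between normal and light.
    return max(0.0, dot(normal, normalize(to_light)))


def render(width=32, height=16, ramp=" .:-=+*#%@"):
    """Shade every pixel and map brightness onto an ASCII ramp."""
    rows = []
    for py in range(height):
        rows.append("".join(
            ramp[round(shade_pixel(px, py, width, height) * (len(ramp) - 1))]
            for px in range(width)))
    return rows


print("\n".join(render()))
```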

In other words: It doesn’t matter that you don’t care about it, because in a few short years, the vast majority of 3D games will make use of it. The necessary install base of RT-capable GPUs and consoles is already there if you look at the Steam hardware survey, the PS5, Xbox Series and soon Switch 2. Hell, even phones are already shipping with GPUs that can do it at least a little.

Game developers have been waiting for this tech for decades, as has anyone who has ever gotten a taste of actually working with or otherwise experiencing it since the 1980s.

My personal “this is the future” moment was the groundbreaking real-time ray tracing demo heaven seven from the year 2000:

https://pouet.net/prod.php?which=5

I was expecting it to happen much sooner, though, by the mid to late 2000s at the latest, but rasterized graphics and the hardware that runs them were improving at a much faster pace. This demo runs in software, entirely on the CPU, which obviously had its limitations. I got another delicious taste of near-real-time RT with Nvidia’s Iray rendering engine in the early 2010s, which could churn out complex scenes with PBR materials (instead of the simple, barely textured geometric shapes of heaven seven) at a rate of just a few seconds per frame on a decent GPU with CUDA, and even in real time on a top-of-the-line card. Even running entirely on the CPU, this engine was as fast as a conventional CPU rasterizer. I would sometimes preach about how this was a stepping stone towards this tech appearing in games, but people rarely believed me back then.

They shouldn’t have released a new architecture without dedicated AI accelerators as late as 2022, then, even though they had working AI accelerators at that point, which they were only selling to data centers. FSR 4 can’t be ported back to older AMD architectures for the same reason that DLSS can’t physically work on anything older than an RTX 20 series card (which came out in 2018, by the way): you can only get so much AI acceleration out of general-purpose cores.

AMD’s GPU division is the poster child for short-sighted conservatism in the tech industry, and the results speak for themselves. What’s especially weird is that the dominant company is driving innovation (for now at least) while the underdog was trying to survive by brute-forcing raster performance above all else, like we’re in some upside-down world. Normally, it’s the other way around. AMD have finally (maybe) caught up to one of Nvidia’s technologies from March of 2020, almost half a decade ago. Too bad they 1) are chasing a moving target and 2) have lost almost every other race in the GPU sphere as well, including the one for best raster performance. The fact that their upcoming generation is openly copying Nvidia’s naming scheme is not a good sign: you don’t do that when things are going well.

Things might change in the future, and I hope there will finally be some competition in the GPU sector again, but for now, it’s not looking good, and the recent announcements haven’t changed anything. A vocal minority of PC gamers dismissing ray tracing, upscaling and frame generation as a whole reflects neither what developers are doing nor how buyers are behaving, and the fact that AMD is finally trying to score in all of these areas tells us that the cries of fanboys were just that and not reflective of any reality. If the new generation of AMD GPUs ends up finally delivering decent ray tracing, upscaling and frame generation performance (which I hope it does, because fuck monopolies and those increasingly cringey leather jackets), I wonder if the same people will suddenly reverse course and embrace these technologies. Or maybe I should stop worrying about fanboys.

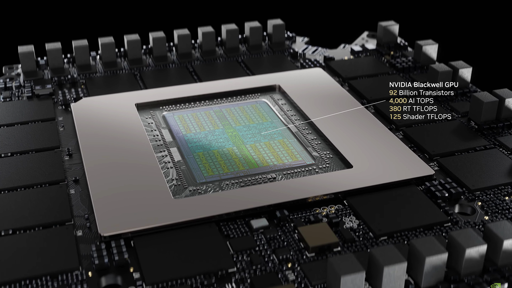

Nvidia is active in more than just one sector, and love them or hate them, they are dominating consumer graphics cards (because they are by far the best option there, with both competitors tripping over their own feet at nearly every turn), professional graphics cards (ditto), automotive electronics (ditto) and AI accelerators (ditto). The company made a number of very correct and far-reaching bets on the future of GPU-accelerated computing a few decades ago, which are now all paying off big time. While I am critical of many if not most aspects of the current AI boom, I would not blame them for selling shovels during a gold rush. If any company in the world has gotten a business model built around AI right, it’s them. Even if, e.g., the whole LLM bubble bursts tomorrow, they’ve made enough money to continue their dominance in other fields. A few of their other bets were correct too, like building genuinely productive and long-lasting relationships with game developers, spending far more on decent drivers than anyone else, and correctly predicting, very early on, two industry trends that are now both out in full force: they made sure that their silicon put a heavy emphasis on supporting both ray tracing and upscaling. They were earlier than AMD and Intel, invested more resources into these hardware features while also providing better software support, and, crucially, they also encouraged developers to make use of these hardware features, which is exactly the right approach. Yes, it would have been nicer of them to open source e.g. DLSS like AMD did with FSR, but the economic incentives for this approach aren’t there, unfortunately.

The marketing claim that the 5070 can keep up with the 4090 is a bit misleading, but there’s a method to the madness: while the three synthetic frames the GPU now creates (instead of just one) are not 100% equivalent to natively rendered frames, the frame interpolation is, from the looks of it, both far better than it has been in the past (to the point that most people will probably not notice it) and has also reached a point where it has a positive impact on input latency instead of merely making games appear more fluid. This is thanks to motion reprojection similar to tech previously found on VR headsets, but now with AI-generated screen edges. Still, it would have been more honest to claim that the “low-end” (at $600, thanks scalpers!) model of the new lineup is more realistically half as fast as the previous flagship, but I guess they felt this wasn’t bombastic enough. Huang isn’t just an ass kisser, but also too boastful for his own good. The headlines wrote themselves, though, which is likely why they were fine with bending the truth a little.
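The "half as fast" estimate follows from dividing out the generated frames. A minimal Python sketch, assuming (as the comment implies) that the parity claim compares the 5070 with three synthetic frames per rendered frame against the 4090 with one, and ignoring whatever it costs to generate those frames; the helper name is hypothetical:

```python
def implied_native_ratio(displayed_ratio, generated_a, generated_b):
    """Ratio of natively rendered fps (card A / card B) implied by a displayed-fps ratio.

    generated_a / generated_b: synthetic frames per rendered frame on each card.
    Assumes displayed fps = rendered fps * (1 + generated) with no overhead.
    """
    return displayed_ratio * (1 + generated_b) / (1 + generated_a)


# "5070 with 4x frame gen matches 4090 with 2x frame gen": displayed ratio of 1.0
print(implied_native_ratio(1.0, generated_a=3, generated_b=1))  # 0.5
```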

Yes, their prices are high, but if there’s one thing they learned during COVID, it’s that there are more than enough people willing and able to pay through the nose for anything that outputs an image. If I can sell the same number of items at $600 as at half the price, then it makes no sense to sell them for less. Hell, it would even be legally dangerous for a company with this much market share.

I know this kind of upscaling and frame interpolation tech is unpopular with a vocal subset of the gaming community, but if there is one actually useful application of AI image generation, it’s using these approaches to make games run as well as they should. It’s not like overworked game developers can just magically materialize more frames otherwise: we would more realistically be back to frame rates in the low 20s, like during the early Xbox 360 and PS3 era, than have everything run at 4K/120 natively. This tech is here to stay and downright necessary to get around the diminishing returns that have been plaguing the games industry for a while, where every small advance in visual fidelity has to be paid for with a high cost in processing power. I know, YOU don’t need fancy graphics, but as expensive and increasingly unsustainable as they are, they have been a main draw for the industry for almost as long as it has existed. Developers have always tried to make their games look as impressive as they possibly could with the hardware that is available; hell, many have even created hardware specifically for the games they wanted to make (that’s one way to sum up, e.g., much of the history of arcade cabinets). Upscaling and frame generation are perhaps a stepping stone towards finally cracking, once and for all, the elusive photorealism barrier developers have been chasing for many decades.

The usual disclaimer before people accuse me of being a mindless corporate shill: I’m using AMD CPUs in most of my PCs, and I’m currently planning two builds with AMD CPUs. The Steam Deck shows just how great of an option even current AMD GPUs can be. I was on AMD GPUs for most of my gaming history until I made the switch to Nvidia when the PC version of GTA V came out, because back then, it was Nvidia who were offering more VRAM at competitive prices, and I wanted to try out doing stuff with CUDA, which is how they have managed to hold me captive ever since. My current GPU is an RTX 2080 (which I got used for a pittance; they haven’t seen any money from me directly since I bought a new GTX 960 for GTA V), and they can hype up the 50 series as much as they want with more or less misleading performance graphs: the ol’ 2080 is still doing more than fine at 1440p, so I won’t be upgrading for many years to come.

Cool info, but I’m not sure about this part. Do you mean downsampling instead? Because chroma subsampling doesn’t make sense in this context.
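For reference, the difference between the two terms in numbers: chroma subsampling (e.g. 4:2:0) keeps brightness (luma) at full resolution and only stores color (chroma) at reduced resolution, while downsampling reduces the resolution of the whole image, which is what supersampling relies on. A minimal Python sketch counting stored samples per frame, assuming even dimensions and one luma plus two chroma planes; the helper name is made up:

```python
def plane_sizes(width, height, mode):
    """Return ((luma_w, luma_h), (chroma_w, chroma_h), total_samples) for one frame.

    Assumes even width and height and one luma plus two chroma planes.
    """
    half = (width // 2, height // 2)
    if mode == "4:4:4":            # no subsampling: every plane at full resolution
        luma, chroma = (width, height), (width, height)
    elif mode == "4:2:0":          # chroma halved on both axes, luma untouched
        luma, chroma = (width, height), half
    elif mode == "downsample_2x":  # every plane halved on both axes
        luma, chroma = half, half
    else:
        raise ValueError(f"unknown mode: {mode}")
    total = luma[0] * luma[1] + 2 * chroma[0] * chroma[1]
    return luma, chroma, total


for mode in ("4:4:4", "4:2:0", "downsample_2x"):
    print(mode, plane_sizes(3840, 2160, mode))
```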